Courtesy of leonardo.ai website. Photo credit: Daniel Twum

Building a Better RAG: The DeepSeek Blueprint

Processing and summarizing meeting recordings efficiently is crucial for productivity. By integrating DeepSeek’s reinforcement learning (RL) techniques into a personal Retrieval-Augmented Generation (RAG) workflow, you can improve the quality and accuracy of your meeting summaries. Here’s how you can apply this approach:

Workflow Overview:

- Chunking Meeting Recordings – Start by splitting your .wav meeting recording into manageable 60-minute .mp3 chunks.

- Loading into NotebookLM – Load each chunk into NotebookLM (notebooklm.google.com) to generate an initial detailed summary (output-1).

- Editing and Refining – Use Google Docs (docs.google.com) to refine output-1, ensuring clarity and accuracy, and save it as output2.pdf.

- Re-processing with NotebookLM – Reload output2.pdf into NotebookLM to generate a more refined summary (output-2).

- Editing and Refining – Use Google Docs (docs.google.com) to refine output-2, ensuring clarity and accuracy, and save it as output3.pdf.

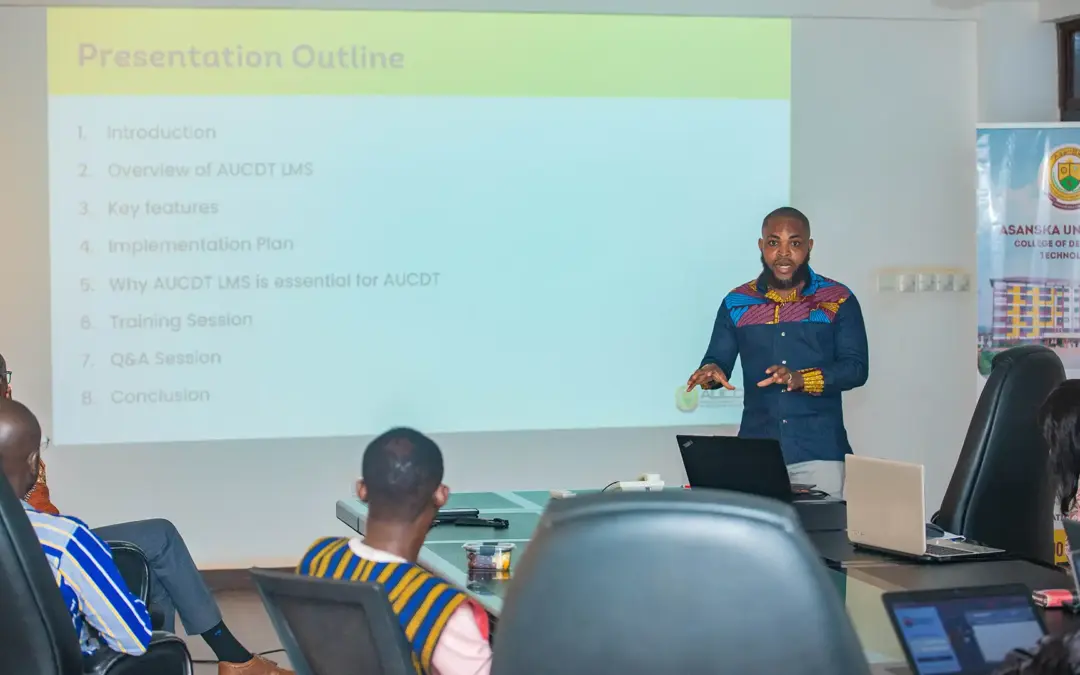

Workflow Overview

Chunking Meeting Recordings: Start by splitting your audio files into 60-minute .mp3 chunks.
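A minimal sketch of the chunking step, assuming ffmpeg is installed and on your PATH (this post does not name a tool, so that choice is an assumption). The helper names `chunk_boundaries` and `ffmpeg_commands` and the `chunk-01.mp3` file pattern are hypothetical. The script only builds the commands, so you can inspect them before running anything.

```python
import wave

CHUNK_SECONDS = 60 * 60  # 60-minute chunks, as in the workflow above


def wav_duration_seconds(path):
    """Return the duration of a .wav file in seconds (standard library only)."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def chunk_boundaries(total_seconds, chunk_seconds=CHUNK_SECONDS):
    """Return (start, length) pairs, in seconds, that cover the recording."""
    boundaries = []
    start = 0
    while start < total_seconds:
        length = min(chunk_seconds, total_seconds - start)
        boundaries.append((start, length))
        start += chunk_seconds
    return boundaries


def ffmpeg_commands(src, total_seconds, chunk_seconds=CHUNK_SECONDS):
    """Build one ffmpeg command per chunk (output names are hypothetical)."""
    commands = []
    for index, (start, length) in enumerate(
        chunk_boundaries(total_seconds, chunk_seconds), start=1
    ):
        commands.append([
            "ffmpeg", "-i", src,
            "-ss", str(start),          # seek to the chunk start, in seconds
            "-t", str(length),          # keep this many seconds
            "-vn",                      # ignore any video stream
            "-codec:a", "libmp3lame",   # encode the audio as MP3
            f"chunk-{index:02d}.mp3",
        ])
    return commands
```

To run the commands, pass each one to `subprocess.run(cmd, check=True)`, with the duration taken from `wav_duration_seconds("meeting.wav")`. A 2.5-hour recording yields three chunks: two of 60 minutes and one of 30.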

Load the .mp3 chunks into NotebookLM (notebooklm.google.com) to generate the output-1.pdf file.

Please provide the detailed minutes based on the provided agenda and the sources.

DeepSeek Reinforcement Learning Integration:

DeepSeek’s RL techniques can be applied to this workflow to iteratively improve the quality of your summaries. Here’s how:

Small-Scale RL Application: After generating output-1, use DeepSeek’s RL algorithms to evaluate and adjust the summarization process. This involves:

- Reward Mechanism: Define a reward function based on summary accuracy, relevance, and coherence.

- Policy Optimization: Adjust the summarization model’s parameters to maximize the reward, ensuring better performance in subsequent iterations.

- Feedback Loop: Use the refined output2.pdf as a new input, allowing the RL model to learn from previous iterations and further enhance the summary quality.

- The final joy: Feed output3.pdf back into NotebookLM and use the prompt:

Please provide the detailed minutes based on the provided meeting agenda and the sources.
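To make the reward mechanism and feedback loop concrete, here is a toy sketch. It is not DeepSeek’s implementation, and it cannot change NotebookLM’s parameters. It only scores candidate summaries so you can choose which one to carry into the next pass. The three scoring proxies, the weights, and every function name are assumptions made for illustration. The `group_relative_advantages` helper follows the group-normalized advantage used in GRPO, the RL method DeepSeek describes: each reward minus the group mean, divided by the group standard deviation (using the population standard deviation here is also an assumption).

```python
import re
import statistics


def _words(text):
    """Lowercased set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def accuracy(summary, source):
    """Proxy (assumption): share of summary words that appear in the source."""
    s = _words(summary)
    return len(s & _words(source)) / len(s) if s else 0.0


def relevance(summary, agenda_items):
    """Proxy (assumption): share of agenda items fully mentioned in the summary."""
    if not agenda_items:
        return 0.0
    s = _words(summary)
    hits = sum(1 for item in agenda_items if _words(item) <= s)
    return hits / len(agenda_items)


def coherence(summary):
    """Proxy (assumption): share of sentences with at least five words."""
    sentences = [x for x in re.split(r"[.!?]+", summary) if x.strip()]
    if not sentences:
        return 0.0
    return sum(1 for x in sentences if len(x.split()) >= 5) / len(sentences)


def reward(summary, source, agenda_items, weights=(0.4, 0.4, 0.2)):
    """Weighted sum of the three proxies; the weights are arbitrary."""
    w_acc, w_rel, w_coh = weights
    return (w_acc * accuracy(summary, source)
            + w_rel * relevance(summary, agenda_items)
            + w_coh * coherence(summary))


def group_relative_advantages(rewards):
    """GRPO-style advantage: (reward - group mean) / group std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0] * len(rewards)  # all candidates scored the same
    return [(r - mean) / std for r in rewards]


def best_candidate(candidates, source, agenda_items):
    """Score a group of candidate summaries and return the best one."""
    rewards = [reward(c, source, agenda_items) for c in candidates]
    advantages = group_relative_advantages(rewards)
    best = max(range(len(candidates)), key=lambda i: advantages[i])
    return candidates[best], advantages
```

In this workflow, the candidates could be several NotebookLM summaries of the same chunk, the source a transcript or your edited notes, and the agenda items the lines of your meeting agenda. A positive advantage marks a summary that scored above the group average, which makes it a reasonable pick to refine in Google Docs and feed into the next round.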

Courtesy of meta.ai Photo credit: Daniel Twum

Benefits

- Improved Accuracy: RL techniques help in fine-tuning the summarization process, leading to more accurate and relevant summaries.

- Iterative Refinement: Each iteration of the workflow benefits from the learning process, continuously improving the output quality.

- Scalability: While this example is small-scale, the principles can be scaled to larger datasets and more complex workflows.

By leveraging DeepSeek’s reinforcement learning techniques, you can turn your personal RAG workflow into a dynamic, self-improving system in which each pass produces a more accurate and relevant meeting summary than the last.

Courtesy of leonardo.ai website. Photo credit: Daniel Twum
